The proper management of patients with non-transfusion-dependent thalassaemia (NTDT) has long been challenging. NTDT encompasses a wide variety of genetic syndromes that are heterogeneous in their molecular background, clinical course, and severity. Despite the availability of recent guidelines from the Thalassaemia International Federation (TIF), the value of several treatments and recommended follow-up strategies still needs to be confirmed on an individual basis.1,2 Furthermore, in clinical practice most recommendations are difficult to apply across the wide spectrum of phenotypes, and there is a clear lack of biomarkers and/or predictive factors for the course of the disease in individual patients.1 Biomarkers are of increasing importance in medicine, particularly in personalised medicine. They help predict prognosis and could be particularly useful for detecting therapeutic and adverse responses in patients with NTDT. Previous retrospective and cross-sectional studies have shown that morbidity in patients with NTDT is directly related to disease severity, which in turn depends on the variable degree of ineffective erythropoiesis, iron loading, and peripheral haemolysis.
Biomarkers reflecting these primary pathogenetic parameters, such as growth differentiation factor-15, fetal haemoglobin, and liver iron concentration, have therefore been used to score the clinical severity of NTDT, but not all of them are used in the current management of the disease.3-5 In earlier retrospective and cross-sectional studies, we showed that the soluble transferrin receptor 1 (sTfR1) level, which fully reflects marrow erythropoietic activity, had very high diagnostic accuracy in predicting the risk of extramedullary haematopoiesis, scoring disease severity, and stratifying patients.6-8 Recent retrospective data also highlighted a relationship between sTfR1 level and key milestones in the management of patients with NTDT, such as age at diagnosis, age at first transfusion, age at splenectomy, and age at the start of chelation therapy.9

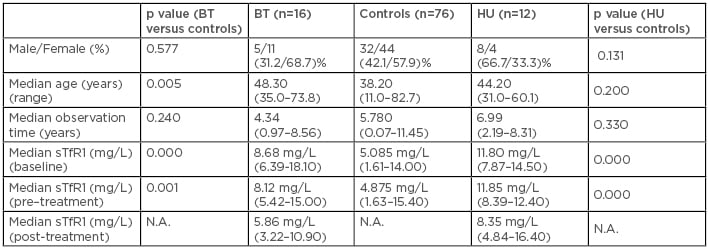

The purpose of this study was to further validate the sTfR1 level as a biomarker in a longer-term prospective assessment. In a cohort of 104 patients whose sTfR1 level has been measured approximately twice a year since 2007, we explored the longitudinal trend in sTfR1 level. During the observation period, most patients (n=76; 73%) did not require any treatment affecting erythropoiesis (controls) and therefore maintained a steady sTfR1 level (Table 1). In contrast, 16 (15%) patients were started on regular blood transfusion (BT) therapy and 12 (12%) on hydroxyurea (HU) to treat and/or prevent anaemia and complications such as extramedullary haematopoiesis, cardiomyopathy, and fatigue. Both groups of treated patients had significantly higher baseline sTfR1 levels than controls (Table 1). After the start of treatment, we observed a statistically significant reduction in sTfR1 level in both groups (p=0.00023 and p=0.005 in the BT and HU groups, respectively; data not shown).

Table 1: Characteristics of the evaluated population.

BT: blood transfusion; HU: hydroxyurea; N.A.: not applicable; sTfR1: soluble transferrin receptor 1.

Biomarkers are essential tools for tailoring treatment to the individual patient and thus for personalised medicine; in NTDT, they could enable more efficient management and timely intervention in the natural history of the disease. Overall, these prospective data further support measuring the sTfR1 level in patients with NTDT at first diagnosis, as an elevated level may provide an early indicator of the need for treatments to reduce and/or prevent NTDT complications linked to anaemia and/or expanded erythropoiesis.